I recently had to deal with a lot of archived Windows Security Logs (evtx files) spanning a fairly lengthy period of time. The evtx binary was introduced with Windows Vista and can be found on all modern version of windows. The

author of EVTX Parser has posted his work on documenting the evtx file structure

here and has created a utility called EVTX Parser that will parse evtx binaries and store them as xml. A good overview of his research and tool is posted in a slide deck from the

SANS Forensic Summit in 2010.

There are a few additional free tools available to search and filter Windows event logs if you don't have a log management product. While the Windows event log supports the import of multiple evtx files, I can tell you through experience that the MMC will puke if you feed it a large amount of files. Moreover, there is limited

support for many of the

xpath string functions such as "contains" and "starts-with" which can be hindrance. All the same, I managed to come up with some useful expressions to query Object Access logs from Windows 7 and 2008 R2 Server.

Microsoft provides a decent

spreadsheet on Windows Security Event ID's and some

documentation on the schema of events. Looking at the XML of a few events, however, will certainly give you what you need.

When dealing with object access logs, you are going to need to distinguish between the types of access granted on the file system and registry. After much googling and experimentation I managed to scrape together the following

Access Mask values and their associated bit wise equivalents used in the Windows Event log. These are the permissions that were exercised on the audited object(s).

1537 (0x10000) = Delete

4416 (0x1) = ReadData(or List Directory)

4417 (0x6) = WriteData(or Add File) (0x2 on Windows 2008 Server)

4418 (0x4) = AppendData (or AddSubdirectory)

4432 (0x1) = Query Key Value

4433 (0x2) = Set Key Value

4434 (0x4) = Create Sub Key

So for example if you need to write and expression to see all successful and failed modifications by a particular user on files and folders.

<querylist>

<query id="0" path="Security">

<select path="Security">*[EventData[Data[@Name='SubjectUserName']='bugbear' and [@Name='AccessMask']='0x6']]</select>

</query>

</querylist>

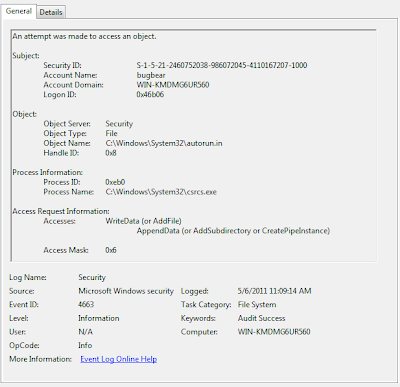

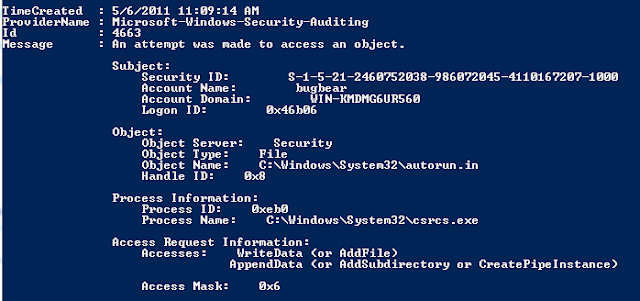

After playing with different variations of this query, I began to get creative during dynamic analysis of the Renocide worm and its effects on the System32 and HKLM registry keys. After enabling auditing on both objects, I came up with the following query to produce all changes made by the payload and malicious process. Note: the syntax when working with an externally saved evtx file.

<querylist>

<query id="0" path="file://C:\Worm.evtx">

<select path="file://C:\Worm.evtx">*[System[Provider[@Name='Microsoft-Windows-Security-Auditing'] and EventID=4663 and (Task = 12800 or Task = 12801)] and EventData[Data[@Name='ProcessName']='\Device\HarddiskVolume2\02MAY2011\scffog.exe' or Data='C:\Windows\System32\csrcs.exe']]</select>

</query>

</querylist>

This produced some interesting logs I used for further analysis.

If filtering multiple archived evtx files you can import the files into the mmc event viewer, create a view including them, and filter on that view. But dont expect to be able to work with a large amount of data. In fact, Microsoft will generate a warning if you attempt to import more than ten evtx files. Fortunately, there are faster and more flexible alternatives.

Microsoft Log Parser will parse the binary (specify evt as the input type). Specifying a wild card in the filename will parse multiple files located in a specified folder and Log Parser also provides additional flexibility by allowing the use of statements such as "LIKE". The following are valid data fields that can be used when parsing evt/evtx binaries.

Note: If filtering by user you will need to use the SID and much of the event data, such as access masks, are combined as a string in the "Message" data field. The following is an example of a query that will pull events from multiple evtx binaries that contain the specified WriteData and Delete Access Mask values.

LogParser.exe -i:evt -o:csv "Select * from C:\Logs\*.evtx where EventID=4663 and (Message Like '%Access Mask: 0x6%' or Message Like '%Access Mask: 0x10000%')" > C:\Logs\Out.csv

Another alternative is Windows Powershell. The following is a similar example as the one given above (all WriteData and Delete Access Masks) using the

Get_WinEvent and

Where_Object Cmdlet'.

get-winevent -path "C:\Logs\Comp1.evtx", "C:\Logs\Comp2.evtx" | where {$_.Id -eq "4663" -and $_.message -like "*0x10000*" -or $_.Id -eq 4663 -and $_.message -like "*0x6*"} > C:\Logs\Out.csv

Using "| Format-List" provides a view of the data fields available for use with the "Where" statement.

While not ideal, the IT Practicioner or Incident Responder can certainly wrangle with evtx files without a SIEM or Log management system. The recent release of the

Verizon DBIR report (2011) included a statement on page 60 that notes an interesting but not unexpected finding.

"...discovery through log analysis and review has dwindled down to 0%. So the good news is that things are only looking up from here..." - Verizon DBIR 2011

Happy Hunting!

Updated May 19, 2011

I intentionally did not provide any detail on enabling Object Access auditing in Windows since there is a fair amount of documentation available on that. In retrospect, however, I did want to mention a few things and share a few tips.

First, choose what Accesses you audit carefully. Accesses such as "List Folder/Read Data" are very noisy and will only increase the amount of logs you have to parse and may fill up the event log completely so it begins to overwrite itself (note: there are settings for the size of the log too).

Second consider what user or group you audit access for carefully. The "Users" group may be fine for auditing access to files stored on a file server but consider using the "Everyone" group if auditing changes made by malicious code. This group will include the System account.

Lastly, enabling auditing of changes to the system folders or registry may become resource intensive and non-manageable in a production environment. Use with caution. That said, I do believe it can be useful during analysis of malicious code. I would include a few more locations than just the System32 and HKLM however. The C:\Users, C:\ProgramData, and HKCU keys come to mind.