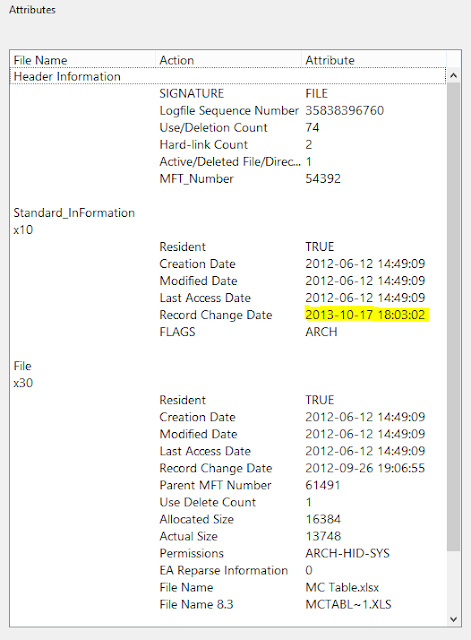

Recently, I dealt with an infection and during forensic analysis noted that the NTFS Master File Table $SI Creation and Modified dates remained unchanged on files encrypted. I made a note of this for later and circled back around during post analysis.

The result was I was able to identify over 4400 files encrypted on the file share. Not bad for an infection that only lasted a few hours before being caught by the most recent Antivirus signature. Load up the backup tapes boys!

Happy Hunting!

Updated November 27, 2013 2:15 PM

After exchanging a few emails with some people in the industry, I think what we are seeing here is an example of File System Tunneling. To be specific, if a file is removed and replaced with the same file name (in the same folder) of a NTFS drive within fifteen seconds (default with NTFS) it will retain the original NTFS attributes. I have seen this before with other Trojans as a way to avoid detection. Just an educated hunch. More info on File System Tunneling can be found here. Thanks to all who responded to me.

Updated November 27, 2013 3:00 PM

Just a quick update of some of the IOC's (Indicators of Compromise: MD5, SHA1, Location) for this particular variant;

2a790b8d3da80746dde3f5c740293f3e 7d27c048df06b586f43d6b3ea4a6405b2539bc2c \\.\PHYSICALDRIVE1\Partition 2 [305043MB]\NONAME [NTFS]\[root]\ProgramData\Symantec\SRTSP\Quarantine\APEA53C866.exeI wanted to also comment on using software restriction policies in Windows to block executable's from running from locations such as C:\Users\%userprofile%\AppData\Local\Temp. With no local admin rights, users only have the ability to write to three locations on modern versions of Windows (by default). Thee are;

f1e2de2a9135138ef5b15093612dd813 ea64129f9634ce8a7c3f5e0dd8c2e70af46ae8a5 \\.\PHYSICALDRIVE1\Partition 2 [305043MB]\NONAME [NTFS]\[root]\Users\%userprofile%\AppData\Local\Temp\e483.tmp.exe

714e8f7603e8e395b6699cea3928ac81 36f40d0be83410e911a1f4231eeef4e863551cee \\.\PHYSICALDRIVE1\Partition 2 [305043MB]\NONAME [NTFS]\[root]\Users\%userprofile%\AppData\Roaming\dhsjabss\dhsjabss

17610024a03e28af43085ef7ad5b65ba 77f9d6e43b8cb1881396a8e1275e75e329ca7037 \\.\PHYSICALDRIVE1\Partition 2 [305043MB]\NONAME [NTFS]\[root]\Users\%userprofile%\AppData\Roaming\dhsjabss\egudsjba.exe

621f35fd095eff9c5dd3e8c7b7514c1e f03233e323f9a49354f2d6c565b6ec95595cc950 \\.\PHYSICALDRIVE1\Partition 2 [305043MB]\NONAME [NTFS]\[root]\Users\%userprofile%\Desktop\Iqbcxbvszzgdxjvbp.bmp

C:\$Recycle.Bin

C:\ProgramData

C:\Users\%Userprofile%

The attackers know this and 99% of infections I see in my environment are using these locations efficiently (including this one).

Unfortunately, a lot of legitimate software also use these locations. So using, suggestions such as Software Restriction Policies, to stop the execution from these locations in a large enterprise environment may or may not be realistic. I suspect adding rules, to check if the executable is legitimately signed, would reduce false positives. I am, however, seeing malicious code signed on occasion. In conclusion, there is no silver bullet here but I personally plan to explore these defenses more and will update what I find as I do.

Lastly, some online posts of this malware has mentioned the use of the HKEY_CURRENT_USER\Software\CryptoLocker location in the Windows registry as a way to determine what files have been encrypted. I just wanted to mention, that I did carve the ntuser.dat file from the compromised system and noted that this location did exist in the registry. It however, did not contain any entries on what files were encrypted.

Updated December 05, 2013 3:00 PM

Since Michael Mimosa over at Threat Post was kind enough to link back to my post, I thought I would return the favor. Forensics Method Quickly Identifies CryptoLocker Encrypted Files